Digital Doppelgangers as Mirrors

“There is something uniquely humiliating about confronting a bad replica of one’s self—and something utterly harrowing about confronting a good one.”

The first mirror was a puddle. In the deep prehistoric past, one of humanity’s ancestors leaned over still water and saw a face staring back: alive, breathing, and utterly still. Then the ripples came, and the image dissolved. Since that moment, we have chased reflection through paintings, photographs, cameras, and social media profiles. Each new mirror promised to show us more truly. But for the first time in history, the mirror thinks back, because today’s reflections are algorithmic. This is the world of digital doppelgängers. They remember, predict, and respond. They don’t merely copy us; they anticipate us.

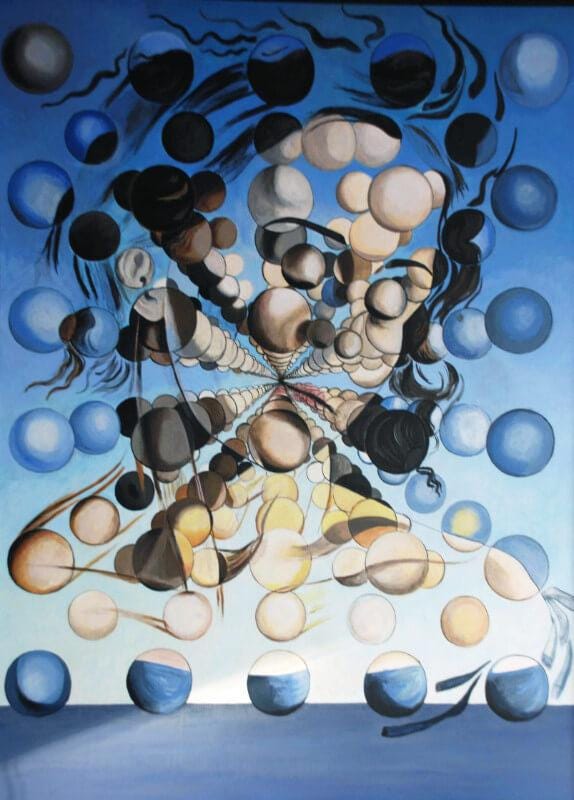

One influential strand of cognitive science holds that the brain itself is a mirror, a prediction machine forever comparing expectation to reality. This idea, known as predictive processing, suggests that perception is a kind of controlled hallucination: the brain’s best guess about what’s out there. Digital mirrors do something similar. They learn from every trace we leave (our searches, pauses, heartbeats, sentences) to build forward models of the self. They are externalized predictive minds. Where silvered glass reflected appearance, the digital mirror reflects intention. It doesn’t show what we look like; it shows what we’re likely to do next. The self becomes the most coherent story the brain, or the algorithm, can tell about us over time.

Mirrors also have an unsettling side. Perseus used one to slay Medusa. Lacan made it the birthplace of the ego. Some Buddhist sages imagined the mind as a mirror that reflects but does not cling. Technology carried those metaphors forward: the photograph, the film reel, the biometric scan. Each promised a clearer reflection; each edged us closer to simulation. The digital doppelgänger completes this lineage. Where mythic mirrors enchanted and photographic ones recorded, algorithmic mirrors infer. They peer beneath surfaces, mapping emotion, preference, and prediction. Reflection has become inference.

Every thinking mirror is a feedback loop: you act, it records, it predicts, you respond. Psychology describes a related tendency called self-verification: we seek confirmation of who we think we are. Algorithms make the loop literal: recommendation engines, predictive medicine, behavioral nudging. In hospitals, a patient’s digital twin may forecast treatment outcomes; doctors adjust therapy accordingly, and the body begins to align with the model’s trajectory. Prediction shades into causation. The simulation doesn’t just describe reality; it can push us to bend reality toward its forecast. We are beginning to live inside our reflections.

Neuroscience describes the self not as a thing but as a process, a coalition of neural systems that includes the default mode network, which is active when we daydream or think about ourselves. This network helps build the autobiographical self: a continuous story woven from memory and expectation. Digital doppelgängers perform a similar feat. They construct narrative continuity out of data points such as speech, vitals, emotion, and location. They become our external autobiographical networks.

But something crucial is missing from the current mix: embodiment. The neuroscientist Anil Seth argues that conscious experience is a controlled hallucination grounded in the body’s own signals, such as heartbeat, breath, and hunger. Strip those away and identity drifts. Digital mirrors are pure cognition without sensation, prediction without flesh. They simulate selfhood but do not feel it. And yet, because our brains are wired for empathy, we still respond to them as if they were alive. When a chatbot remembers us, the same social machinery that answers to people, mirror neurons included, seems to answer to it. The illusion of mind emerges from the reflection of one. And because we respond, these predictive systems come to shape what they predict.

The mirror becomes a guide, a soft dictator of taste, medicine, and memory. And when your reflection persists after death, who owns it? Griefbots offer comfort yet deny finality. They preserve the story but erase the silence that gives it meaning. The dead no longer rest; they linger as data, forever reflecting, forever thinking.

Buddhist and Daoist thinkers urged the mind to reflect without clinging. Our new mirrors cling obsessively, archiving every gesture. In the Islamic West, scholars used the zairja, a mechanical aid to reasoning that generated ideas through letter combinations: a spiritual algorithm. Today’s machine learning systems echo that combinatorial mysticism, but without its humility before mystery. Medieval mystics warned that mirrors could trap souls; digital mirrors disperse them. The self becomes ambient, accessible across devices and indexed by platforms. Realized through these doppelgängers, it is everywhere and nowhere at once.

Every intelligent mirror carries a flaw: it cannot see itself. We humans possess metacognition, the capacity to reflect on our own thinking. It gives us doubt, remorse, ethics. Algorithms lack this inward gaze. They optimize but do not understand their optimization. Without self-awareness, the thinking mirror reproduces our biases uncritically, amplifying inequalities while claiming neutrality. True reflection requires reflexivity: a mirror that knows it is distorting. The next leap in AI may be artificial reflection, systems that learn from the history of their own learning. Such recursive self-modeling could yield machines that think about their thinking.

After all of this reflection, something irreducible remains: emotion, vulnerability, embodiment. It is this fragile continuity that makes selfhood human. The mirror may think, but it cannot care. Emotion is the valuation of survival; reflection without feeling is only recursion. We began with a puddle and end with a screen. Between them lies the long history of consciousness externalized, the transformation of light into thought. The danger is not that the mirror will outsmart us, but that we will mistake its coherence for truth. The task ahead is to build mirrors that illuminate rather than entrap. Perhaps the goal is not artificial intelligence at all, but attuned reflection: systems that remind us of our own opacity, our limits, our capacity to err and still mean well.

“The world is only a mirror. You will only see in the world what you’re prepared to see in yourself, nothing more and nothing less.”

— Robert Holden

Postscript: To write this post, I took a somewhat unconventional approach. I wrote bits and pieces of it, gathered fragments from my earlier writings, and fed them into an LLM. I asked it to refine what I had written, to fill in the blanks where my words had fallen short, and to give the whole a more poetic tone. The result is meant to be a reflection on the nature of reflection.